ainer\">

CUDA的存储器可以大致分为两类:

- 板载显存(On-board memory)

- 片上内存(On-chip memory)

其中板载显存主要包括全局内存(global memory)、本地内存(local memory)、常量内存(constant memory)、纹理内存(texture memory)等,片上内存主要包括寄存器(register)和共享内存(shared memory)。不同类型的内存有各自不同的特点,不过片上内存通常比板载显存要快,而寄存器又是所有存储种类中最快的。本文我们着重介绍共享内存的基础知识以及应用例子。

01

查看自己显卡上的共享内存信息

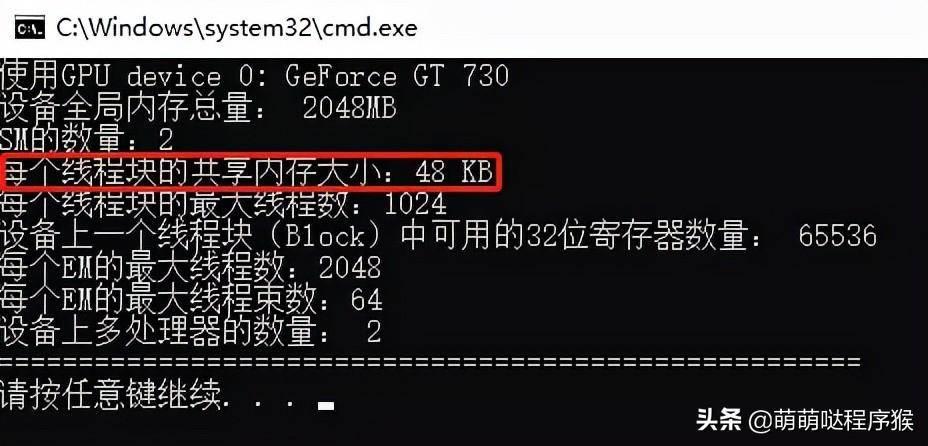

CUDA提供了cudaGetDeviceCount和cudaGetDeviceProperties这两个函数,分别用于获取CUDA设备数、获取CUDA设备属性,通过调用这两个函数,可以方便获取共享内存信息和其它CUDA设备信息:

<span>//显示CUDA设备信息</span><span><span>void</span> <span>show_GPU_info</span><span>(<span>void</span>)</span></span>{ <span>int</span> deviceCount; <span>//获取CUDA设备总数</span> cudaGetDeviceCount(&deviceCount); <span>//分别获取每个CUDA设备的信息</span> <span>for</span>(<span>int</span> i=<span>0</span>;i<deviceCount;i++) { <span>//定义存储信息的结构体</span> cudaDeviceProp devProp; <span>//将第i个CUDA设备的信息写入结构体中</span> cudaGetDeviceProperties(&devProp, i); <span>std</span>::<span>cout</span> << <span>\"使用GPU device \"</span> << i << <span>\": \"</span> << devProp.name << <span>std</span>::<span>endl</span>; <span>std</span>::<span>cout</span> << <span>\"设备全局内存总量:\"</span> << devProp.totalGlobalMem / <span>1024</span> / <span>1024</span> << <span>\"MB\"</span> << <span>std</span>::<span>endl</span>; <span>std</span>::<span>cout</span> << <span>\"SM的数量:\"</span> << devProp.multiProcessorCount << <span>std</span>::<span>endl</span>; <span>std</span>::<span>cout</span> << <span>\"每个线程块的共享内存大小:\"</span> << devProp.sharedMemPerBlock / <span>1024.0</span> << <span>\" KB\"</span> << <span>std</span>::<span>endl</span>; <span>std</span>::<span>cout</span> << <span>\"每个线程块的最大线程数:\"</span> << devProp.maxThreadsPerBlock << <span>std</span>::<span>endl</span>; <span>std</span>::<span>cout</span> << <span>\"设备上一个线程块(Block)中可用的32位寄存器数量: \"</span> << devProp.regsPerBlock << <span>std</span>::<span>endl</span>; <span>std</span>::<span>cout</span> << <span>\"每个EM的最大线程数:\"</span> << devProp.maxThreadsPerMultiProcessor << <span>std</span>::<span>endl</span>; <span>std</span>::<span>cout</span> << <span>\"每个EM的最大线程束数:\"</span> << devProp.maxThreadsPerMultiProcessor / <span>32</span> << <span>std</span>::<span>endl</span>; <span>std</span>::<span>cout</span> << <span>\"设备上多处理器的数量:\"</span> << devProp.multiProcessorCount << <span>std</span>::<span>endl</span>; <span>std</span>::<span>cout</span> << <span>\"======================================================\"</span> << <span>std</span>::<span>endl</span>; }}运行以上函数,得到共享内存信息以及其它设备信息,如下图所示,本人使用的显卡上,针对于每一个线程块,其可以使用的最大共享内存为48 KB。

02

共享内存的特性

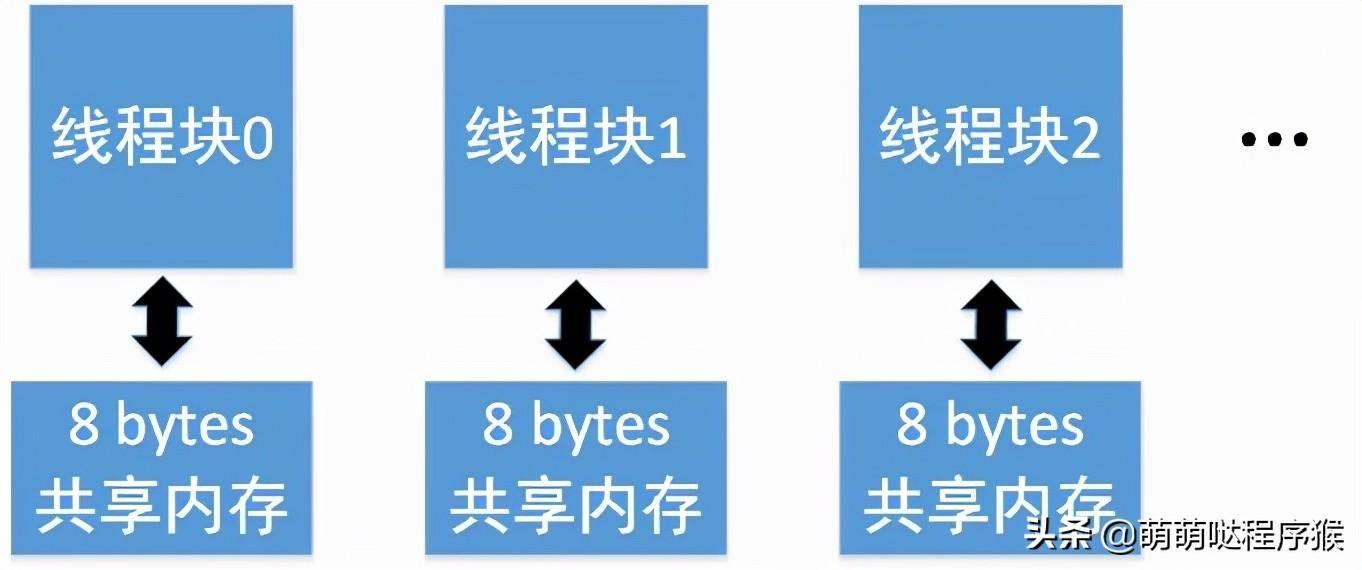

共享内存的主要特点在于“共享”,也即同一个线程块中的所有线程都可以对这一块存储进行读写操作,所以“共享”是针对同一个线程块中所有线程而言的。一旦共享内存被定义并指定大小,系统将给所有线程块都分配相同大小的共享内存,比如定义一个大小为8 bytes的unsigned char型共享内存,那么所有线程块都会被分配一个8 bytes的unsigned char型共享内存。如下图所示:

共享内存在CUDA核函数中定义,通常有两种方式:静态方式、动态方式。

- 静态方式定义。这种方式定义的特点是定义的同时指定大小:

__<span>global__ <span>shared_memory_kernel</span><span>(uchar *inputs, <span>int</span> row, <span>int</span> col)</span></span>{ <span>int</span> x = threadIdx.x + blockDim.x * blockIdx.x; <span>//col</span> <span>int</span> y = threadIdx.y + blockDim.y * blockIdx.y; <span>//row</span> <span>if</span> (x < col && y < row) { __shared__ uchar s[<span>8</span>]; <span>//定义的同时指定大小为8 bytes,因此每个线程块都被分配8 bytes的共享内存</span> . . . }}- 动态方式定义。此方式特点为定义的时候不指定大小,在调用核函数的时候将共享内存大小以输入参数的形式传入。

__<span>global__ <span>shared_memory_kernel</span><span>(uchar *inputs, <span>int</span> row, <span>int</span> col)</span></span>{ <span>int</span> x = threadIdx.x + blockDim.x * blockIdx.x; <span>//col</span> <span>int</span> y = threadIdx.y + blockDim.y * blockIdx.y; <span>//row</span> <span>if</span> (x < col && y < row) { <span>extern</span> __shared__ uchar s[]; <span>//定义的时候不指定大小</span> . . . }}<span><span>void</span> <span>shared_memory_test</span><span>(<span>void</span>)</span></span>{ . . . <span>//传入的第1个参数block_num为线程块总数</span> <span>//第2个参数thread_num为每个线程块包含的线程数</span> <span>//第3个参数8为共享内存大小,所以动态共享内存大小通过第3个参数传入</span> shared_memory_kernel<<<block_num, thread_num, <span>8</span>>>>(inputs, row, col); . . .}需要注意:动态定义共享内存时,调用核函数传入的数值必须以字节byte为单位,所以如果定义的共享内存不是byte类型,数值必须乘以类型占用的字节数。比如要动态定义长度为8的float类型共享内存,那么传入核函数的数值为8*sizeof(float)。

shared_memory_kernel<<<block_num, thread_num, <span>8</span> * <span>sizeof</span>(<span>float</span>)>>>(inputs, row, col);03

共享内存的应用例子

前文我们讲的数组元素规约求和算法,使用CUDA全局内存来存储数据:

CUDA加速——基于规约思想的数组元素求和

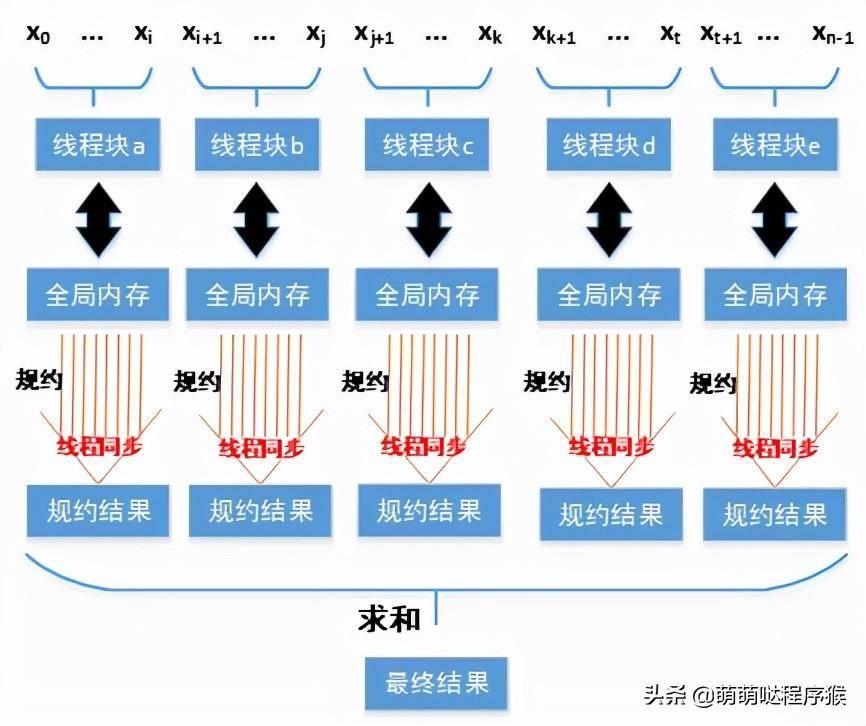

我们知道全局内存属于板载显存,而共享内存属于片上内存,因此共享内存的读写速度比全局内存快得多。在前文代码的核函数中有个for循环需要多次读写全局内存,全局内存本身就很慢,而且如果不是连续访问会更慢,因此本文我们尝试使用共享内存来代替全局内存实现前文讲的规约求和算法。

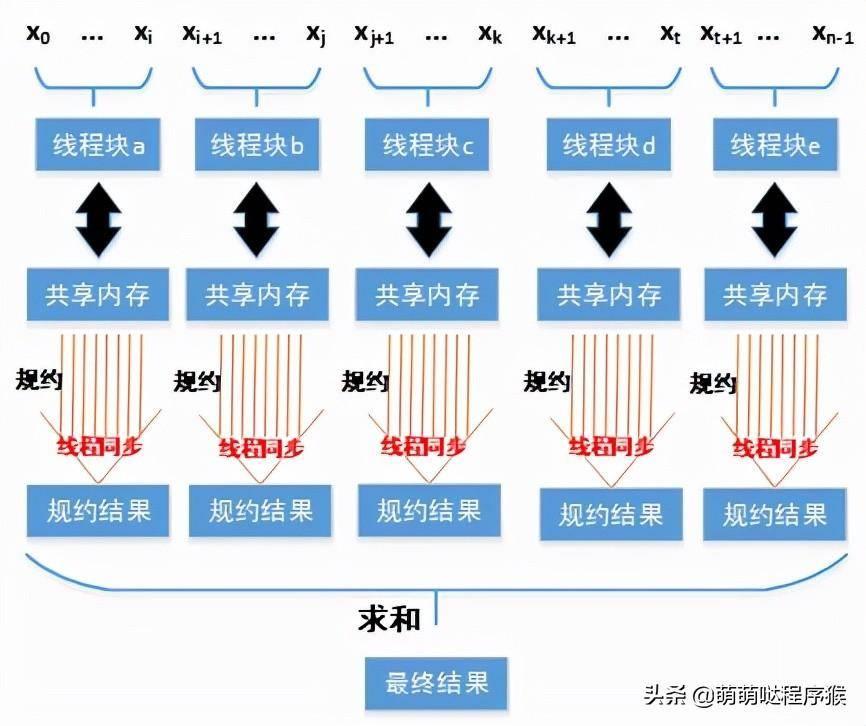

由于前文的规约算法是在不同线程块分别进行的,而共享内存又具有线程块内共享的特性,故共享内存正好适合此应用场景。

前文的规约结构

本文使用共享内存的规约结构

下面我们比较使用共享内存的核函数与前文使用全局内存的核函数:

<span>//使用全局内存</span>__<span>global__ <span>void</span> <span>cal_sum_ker0</span><span>(<span>float</span> *Para, <span>float</span> *blocksum_cuda)</span></span>{ <span>int</span> tid = blockIdx.x * blockDim.x + threadIdx.x; <span>if</span>(tid < N) { <span>for</span> (<span>int</span> index = <span>1</span>; index < blockDim.x; index = (index*<span>2</span>)) { <span>if</span> (threadIdx.x % (index*<span>2</span>) == <span>0</span>) { Para[tid] += Para[tid + index]; } __syncthreads(); <span>//同步,以防止归约过程中某个线程运行速度过快导致计算错误</span> } <span>if</span>(threadIdx.x == <span>0</span>) <span>//整个数组相加完成后,将共享内存数组0号元素的值赋给全局内存数组0号元素 </span> blocksum_cuda[blockIdx.x] = Para[tid]; }}<span>//使用共享内存</span><span>//blockIdx.x为线程块的ID号</span><span>//blockDim.x每个线程块中包含的线程总个数</span><span>//threadIdx.x为每个线程块中的线程ID号</span>__<span>global__ <span>void</span> <span>cal_sum_ker</span><span>(<span>float</span> *Para, <span>float</span> *blocksum_cuda)</span></span>{ <span>int</span> tid = blockIdx.x * blockDim.x + threadIdx.x; <span>if</span>(tid < N) { <span>//动态方式定义float型共享内存</span> <span>extern</span> __shared__ <span>float</span> s_Para[]; <span>//线程块中的每个线程负责把其对应的数据从全局内存加载到共享内存</span> s_Para[threadIdx.x] = Para[tid]; __syncthreads(); <span>//块内线程同步,等待线程块内所有线程加载数据完毕 </span> <span>for</span> (<span>int</span> index = <span>1</span>; index < blockDim.x; index = (index*<span>2</span>)) { <span>if</span> (threadIdx.x % (index*<span>2</span>) == <span>0</span>) { <span>//在for循环中使用共享内存实现规约,避免频繁读写全局内存</span> s_Para[threadIdx.x] += s_Para[threadIdx.x + index]; } __syncthreads(); <span>//块内线程同步,以防止归约过程中某个线程运行速度过快导致计算错误</span> } <span>if</span>(threadIdx.x == <span>0</span>) <span>//将共享内存数组0号元素的值赋给全局内存数组</span> blocksum_cuda[blockIdx.x] = s_Para[threadIdx.x]; }}接着在测试代码中分别调用上方两个核函数。调用时指定共享内存的长度为每个线程块包含的线程数:

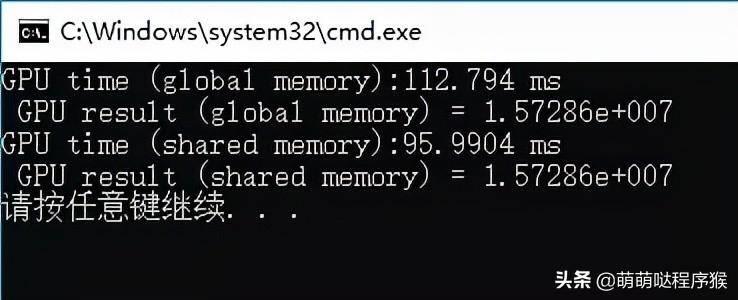

<span><span>void</span> <span>cal_sum_test</span>()</span> { Timer_Us2 timer; <span>//定义CPU端数组</span> <span>float</span> *test_d = (<span>float</span> *)malloc(N * <span>sizeof</span>(<span>float</span>)); <span>for</span> (<span>long</span> <span>long</span> i = <span>0</span>; i < N; i++) { test_d[i] = <span>0.5</span>; } <span>dim3 <span>sumblock</span>(<span><span>512</span></span>)</span>;<span>//设置每个线程块有512个线程</span> <span>dim3 <span>sumgrid</span>(<span>((N%sumblock.x</span>) ? (<span>N/sumblock.x + <span>1</span></span>) : (<span>N/sumblock.x</span>)))</span>; <span>float</span> *test_d_cuda; <span>float</span> *blocksum_cuda; <span>float</span> *blocksum_host = (<span>float</span> *)malloc(<span>sizeof</span>(<span>float</span>) * sumgrid.x); cudaMalloc((<span>void</span> **)&test_d_cuda, <span>sizeof</span>(<span>float</span>) * N); cudaMalloc((<span>void</span> **)&blocksum_cuda, <span>sizeof</span>(<span>float</span>) * sumgrid.x); timer.start_timer(); <span>//将数据从CPU端拷贝到GPU端</span> cudaMemcpy(test_d_cuda, test_d, <span>sizeof</span>(<span>float</span>) * N, cudaMemcpyHostToDevice); <span>//调用使用全局内存规约的核函数</span> cal_sum_ker0 << > > (test_d_cuda, blocksum_cuda); <span>//将所有线程块的规约结果从GPU端拷贝到CPU端</span> cudaMemcpy(blocksum_host, blocksum_cuda, <span>sizeof</span>(<span>float</span>) * sumgrid.x, cudaMemcpyDeviceToHost); <span>//在CPU端对所有线程块的规约求和结果做串行求和</span> <span>double</span> sum = <span>0.0</span>; <span>for</span>(<span>int</span> i = <span>0</span>; i < sumgrid.x; i++) { sum += blocksum_host[i]; } timer.stop_timer(<span>\"GPU time (global memory):\"</span>); cout << <span>\" GPU result (global memory) = \"</span> << sum << endl; <span>//显示GPU端结果</span> <span><span>///</span><span>///</span><span>///</span><span>///</span><span>///</span><span>///</span><span>///</span><span>///</span><span>///</span><span>///</span><span>///</span><span>///</span><span>///</span><span>///</span><span>///</span><span>///</span><span>///</span><span>///</span><span>///</span><span>///</span><span>///</span><span>///</span><span>///</span><span>///</span><span>///</span><span>///</span><span>///</span><span>///</span><span>///</span><span>///</span><span>///</span><span>///</span><span>///</span><span>///</span><span>///</span><span>///</span><span>///</span><span>///</span><span>///</span><span>///</span><span>///</span><span>///</span><span>///</span><span>///</span><span>///</span><span>///</span><span>///</span>/</span> timer.start_timer(); cudaMemcpy(test_d_cuda, test_d, <span>sizeof</span>(<span>float</span>) * N, cudaMemcpyHostToDevice); <span>//调用使用共享内存规约的核函数,sumblock.x为每个线程块包含的线程数,sumblock.x * sizeof(float)就是传入的共享内存字节数</span> cal_sum_ker << < sumgrid, sumblock, sumblock.x * <span>sizeof</span>(<span>float</span>) >> > (test_d_cuda, blocksum_cuda); cudaMemcpy(blocksum_host, blocksum_cuda, <span>sizeof</span>(<span>float</span>) * sumgrid.x, cudaMemcpyDeviceToHost); sum = <span>0.0</span>; <span>for</span>(<span>int</span> i = <span>0</span>; i < sumgrid.x; i++) { sum += blocksum_host[i]; } timer.stop_timer(<span>\"GPU time (shared memory):\"</span>); cout << <span>\" GPU result (shared memory) = \"</span> << sum << endl; <span>//显示GPU端结果</span> cudaFree(test_d_cuda); cudaFree(blocksum_cuda); free(blocksum_host); free(test_d);} 运行结果如下,可以看到使用共享内存之后,耗时减少了,这是因为共享内存的读写效率比全局内存高。